Modifiers#

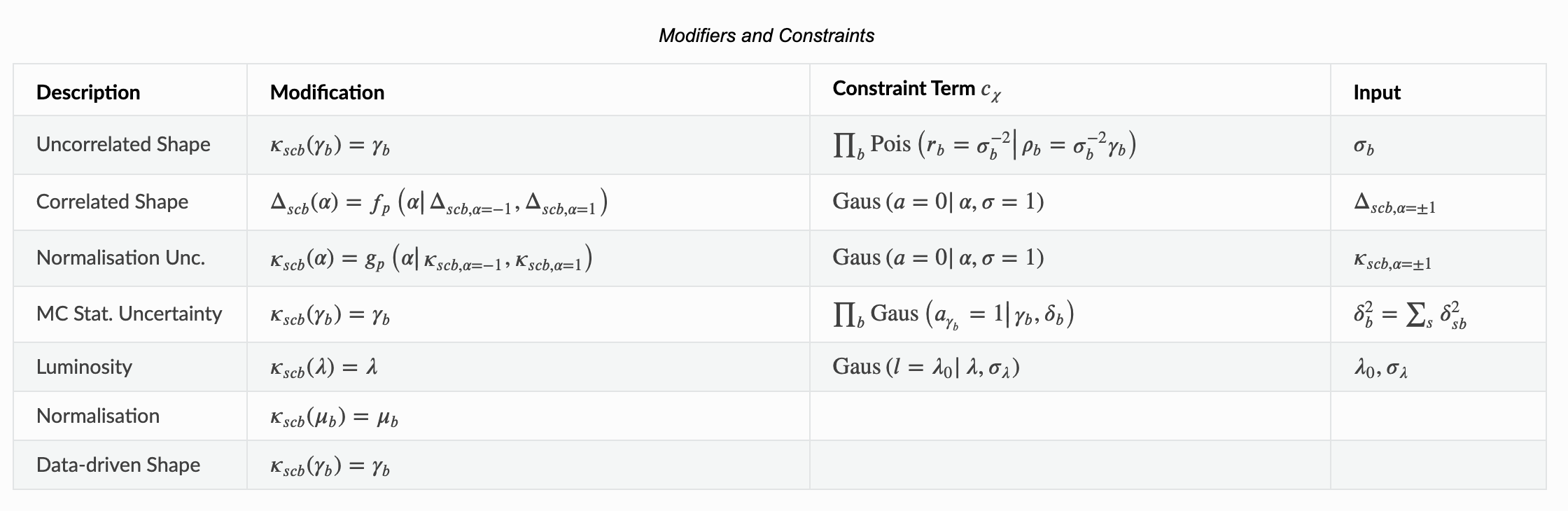

In our simple examples so far, we’ve only used two types of modifiers, but HistFactory allows for a handful of modifiers that have proven to be sufficient to model a wide range of uncertainties. Each of the modifiers is further described in the Modifiers section of the pyhf docs on model specification.

There is an additional table of Modifiers and Constraints in the introduction of the pyhf documentation to use as reference.

In each of the sections below, we will explore the impact of modifiers on the data.

import ipywidgets as widgets

import pyhf

Unconstrained Normalisation (normfactor)#

This is a simple scaling of a sample (primarily used for signal strengths). Controlled by a single nuisance parameter.

model = pyhf.Model(
    {
        "channels": [
            {
                "name": "singlechannel",
                "samples": [
                    {
                        "name": "signal",
                        "data": [5.0, 10.0],
                        "modifiers": [
                            {
                                "name": "my_normfactor",
                                "type": "normfactor",
                                "data": None,
                            }
                        ],
                    }
                ],
            }
        ]
    },
    poi_name=None,
)

print(f" aux data: {model.config.auxdata}")
print(f" nominal: {model.expected_data([1.0])}")
print(f"2x nominal: {model.expected_data([2.0])}")
print(f"3x nominal: {model.expected_data([3.0])}")

@widgets.interact(normfactor=(0, 10, 1))
def interact(normfactor=1):
    print(f"normfactor = {normfactor:2d}: {model.expected_data([normfactor])}")

aux data: []

nominal: [ 5. 10.]

2x nominal: [10. 20.]

3x nominal: [15. 30.]

Example use case#

A normfactor modifier could be used to scale the rate of a possible BSM signal, or to fit the normalisation of background samples in situ, simultaneously with the signal.

Normalisation Uncertainty (normsys)#

This is a simple constrained scaling of a sample representing the sample normalisation uncertainty, applied to every bin in the sample. Controlled by a single nuisance parameter.

model = pyhf.Model(
    {
        "channels": [
            {
                "name": "singlechannel",
                "samples": [
                    {
                        "name": "signal",
                        "data": [5.0, 10.0],
                        "modifiers": [
                            {
                                "name": "my_normsys",
                                "type": "normsys",
                                "data": {"hi": 0.9, "lo": 1.1},
                            }
                        ],
                    }
                ],
            }
        ]
    },
    poi_name=None,
)

print(f" aux data: {model.config.auxdata}")
print(f" down: {model.expected_data([-1.0])}")
print(f" nominal: {model.expected_data([0.0])}")
print(f" up: {model.expected_data([1.0])}")

@widgets.interact(normsys=(-1, 1, 0.1))
def interact(normsys=0):
    print(f"normsys = {normsys:4.1f}: {model.expected_data([normsys])}")

aux data: [0.0]

down: [ 5.5 11. -1. ]

nominal: [ 5. 10. 0.]

up: [4.5 9. 1. ]
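The down, nominal, and up rates above can be reproduced by hand. The sketch below assumes a piecewise-exponential scale factor (`hi**alpha` for positive `alpha`, `lo**(-alpha)` for negative `alpha`); pyhf's default interpolation is smoother between -1 and 1, but it should agree with this form at `alpha = -1, 0, 1`. The helper names `normsys_factor` and `apply_normsys` are made up for illustration and are not part of pyhf.

```python
def normsys_factor(alpha, hi, lo):
    """Piecewise-exponential scale factor (an assumed, simplified interpolation)."""
    return hi**alpha if alpha >= 0 else lo ** (-alpha)


def apply_normsys(nominal, alpha, hi=0.9, lo=1.1):
    """Scale every bin of the sample by the same alpha-dependent factor."""
    factor = normsys_factor(alpha, hi, lo)
    return [factor * rate for rate in nominal]


nominal = [5.0, 10.0]
for label, alpha in (("down", -1.0), ("nominal", 0.0), ("up", 1.0)):
    print(f"{label:>8}: {apply_normsys(nominal, alpha)}")
```

Note that the nuisance parameter itself is centred on 0, which is why the auxiliary data is `[0.0]` and the last entry of each printed array simply echoes the parameter value.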

What do you think happens if we switch "hi" and "lo"?

Example use case#

A normsys modifier could represent part of the uncertainty associated with a jet energy scale or a jet energy resolution (which can contribute both a normsys and a histosys to a sample).

MC Statistical Uncertainty (staterror)#

A staterror modifier is applied as a set of bin-wise scale factors to each sample. This is used to model the uncertainty in the bins due to Monte Carlo statistics. Controlled by \(n\) nuisance parameters (one for each bin).

In particular, this tends to be correlated across samples as they’re usually modeled by the same MC generator. Unlike shapesys which is constrained by a Poisson, this modifier is constrained by a Gaussian.

model = pyhf.Model(
    {
        "channels": [
            {
                "name": "singlechannel",
                "samples": [
                    {
                        "name": "signal",
                        "data": [5.0, 10.0],
                        "modifiers": [
                            {
                                "name": "my_staterror",
                                "type": "staterror",
                                "data": [1.0, 2.0],
                            }
                        ],
                    }
                ],
            }
        ]
    },
    poi_name=None,
)

print(f"aux data: {model.config.auxdata}")
print(f"(1x, 1x): {model.expected_data([1.0, 1.0])}")
print(f"(2x, 2x): {model.expected_data([2.0, 2.0])}")
print(f"(3x, 3x): {model.expected_data([3.0, 3.0])}")

@widgets.interact(staterror_0=(0, 10, 1), staterror_1=(0, 10, 1))
def interact(staterror_0=1, staterror_1=1):
    print(
        f"staterror = ({staterror_0:2d}, {staterror_1:2d}): {model.expected_data([staterror_0, staterror_1])}"
    )

aux data: [1.0, 1.0]

(1x, 1x): [ 5. 10. 1. 1.]

(2x, 2x): [10. 20. 2. 2.]

(3x, 3x): [15. 30. 3. 3.]

This looks/feels a lot like shapesys. Is there a difference in the expected data? What happens to the data for the staterror? (Hint: it has to do with the Gaussian constraint.)

Example use case#

A staterror modifier represents the inherent statistical uncertainty due to the finite sample size of Monte Carlo simulations of physics processes.
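To make the role of the `"data": [1.0, 2.0]` entry more concrete, here is a minimal sketch of how the width of each bin's Gaussian constraint could be derived from it. It assumes the usual HistFactory convention that the width is the relative uncertainty of the bin, with absolute uncertainties of samples sharing the modifier added in quadrature; the function names are hypothetical and this is not pyhf's actual implementation.

```python
import math


def staterror_sigmas(nominals, uncertainties):
    """Relative per-bin uncertainty, combining all samples that share the modifier.

    nominals and uncertainties are lists of per-sample lists (one value per bin).
    """
    n_bins = len(nominals[0])
    sigmas = []
    for b in range(n_bins):
        total_rate = sum(sample[b] for sample in nominals)
        total_err = math.sqrt(sum(unc[b] ** 2 for unc in uncertainties))
        sigmas.append(total_err / total_rate)
    return sigmas


def apply_staterror(nominal, gammas):
    """Scale each bin by its own parameter (one gamma per bin)."""
    return [g * rate for g, rate in zip(gammas, nominal)]


# Single sample from the example above: rates [5, 10], absolute errors [1, 2]
print(staterror_sigmas([[5.0, 10.0]], [[1.0, 2.0]]))
print(apply_staterror([5.0, 10.0], [2.0, 2.0]))
```

Under this assumption both bins carry a 20% relative uncertainty, and each parameter is constrained around 1.0, matching the auxiliary data `[1.0, 1.0]` printed above.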

Luminosity (lumi)#

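A lumi modifier is applied as a global scale factor across channel and sample boundaries: every sample that carries it is scaled by the same parameter. Sample rates derived from theory calculations, as opposed to data-driven estimates (shapefactor), are scaled to the integrated luminosity corresponding to the observed data. Controlled by a single nuisance parameter, which is constrained by a Gaussian whose auxiliary data and width are set in the "parameters" block of the specification.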

model = pyhf.Model(
    {
        "channels": [
            {
                "name": "singlechannel",
                "samples": [
                    {
                        "name": "signal",
                        "data": [5.0, 10.0],
                        "modifiers": [{"name": "lumi", "type": "lumi", "data": None}],
                    }
                ],
            }
        ],
        "parameters": [
            {
                "name": "lumi",
                "auxdata": [1.0],
                "sigmas": [0.017],
                "bounds": [[0.915, 1.085]],
                "inits": [1.0],
            }
        ],
    },
    poi_name=None,
)

# The model has a single parameter (lumi), so we pass exactly one value.
print(f"aux data: {model.config.auxdata}")
print(f" nominal: {model.expected_data([1.0])}")
print(f"2x nominal: {model.expected_data([2.0])}")
print(f"3x nominal: {model.expected_data([3.0])}")

@widgets.interact(lumi=(0, 10, 1))
def interact(lumi=1):
    print(f"lumi = {lumi:2d}: {model.expected_data([lumi])}")

aux data: [1.0]

nominal: [ 5. 10. 1.]

2x nominal: [10. 20. 2.]

3x nominal: [15. 30. 3.]

Example use case#

A lumi modifier represents the uncertainty on the integrated luminosity measurement, which affects every sample whose rate is normalised to that luminosity.
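As a rough illustration of what "global" means here, the sketch below scales the sum of two samples by one shared factor. The `background` sample and the helper `apply_lumi` are hypothetical, and the sketch assumes every sample carries the lumi modifier. It also checks a small piece of arithmetic: the bounds used in the specification above, [0.915, 1.085], happen to equal 1 ± 5σ for σ = 0.017.

```python
def apply_lumi(samples, lumi):
    """Scale the summed rate of all samples by the same lumi factor."""
    n_bins = len(next(iter(samples.values())))
    return [
        lumi * sum(rates[b] for rates in samples.values()) for b in range(n_bins)
    ]


samples = {
    "signal": [5.0, 10.0],
    "background": [50.0, 60.0],  # hypothetical second sample, for illustration only
}

sigma = 0.017
bounds = (1.0 - 5 * sigma, 1.0 + 5 * sigma)

print(f"nominal total: {apply_lumi(samples, 1.0)}")
print(f"lumi at upper bound: {apply_lumi(samples, bounds[1])}")
print(f"bounds at +-5 sigma: {bounds}")
```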

Data-driven Shape (shapefactor)#

A shapefactor modifier is applied as an unconstrained, bin-wise multiplicative factor and represents a data-driven estimation of sample rates (e.g. think of estimating multi-jet backgrounds). Controlled by \(n\) nuisance parameters (one for each bin).

This feels like a bin-wise normfactor.

model = pyhf.Model(
    {
        "channels": [
            {
                "name": "singlechannel",
                "samples": [
                    {
                        "name": "signal",
                        "data": [5.0, 10.0],
                        "modifiers": [
                            {
                                "name": "my_shapefactor",
                                "type": "shapefactor",
                                "data": None,
                            }
                        ],
                    }
                ],
            }
        ]
    },
    poi_name=None,
)

print(f"aux data: {model.config.auxdata}")
print(f"(1x, 1x): {model.expected_data([1.0, 1.0])}")
print(f"(2x, 2x): {model.expected_data([2.0, 2.0])}")
print(f"(3x, 3x): {model.expected_data([3.0, 3.0])}")

@widgets.interact(shapefactor_0=(0, 10, 1), shapefactor_1=(0, 10, 1))
def interact(shapefactor_0=1, shapefactor_1=1):
    print(
        f"shapefactor = ({shapefactor_0:2d}, {shapefactor_1:2d}): {model.expected_data([shapefactor_0, shapefactor_1])}"
    )

aux data: []

(1x, 1x): [ 5. 10.]

(2x, 2x): [10. 20.]

(3x, 3x): [15. 30.]
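The examples above move both parameters together, which makes a shapefactor look just like a normfactor. The difference only shows up when the bins are scaled independently. The short sketch below (plain Python, with the hypothetical helper `apply_shapefactor`) illustrates this by comparing the ratio between the two bins before and after scaling.

```python
def apply_shapefactor(nominal, factors):
    """Scale each bin by its own unconstrained factor (one factor per bin)."""
    if len(factors) != len(nominal):
        raise ValueError("need exactly one factor per bin")
    return [f * rate for f, rate in zip(factors, nominal)]


nominal = [5.0, 10.0]

same = apply_shapefactor(nominal, [2.0, 2.0])  # acts like a normfactor of 2
different = apply_shapefactor(nominal, [2.0, 3.0])  # changes the shape as well

for label, rates in (("nominal", nominal), ("(2x, 2x)", same), ("(2x, 3x)", different)):
    print(f"{label:>9}: rates = {rates}, bin ratio = {rates[1] / rates[0]}")
```

A single normfactor can never change the bin ratio, while a shapefactor can, which is what makes it suitable for estimating a shape from data.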

Example use case#

A shapefactor modifier could represent a scale factor applied to multijet backgrounds derived from measurements in data.

Correlating Modifiers#

Like in HistFactory, modifiers are controlled by parameters which are named based on the name you assign to the modifier. Therefore, as long as the modifiers you want to correlate are “mostly” compatible (e.g. same number of nuisance parameters allocated), you can correlate them!

Let’s repeat the normsys example, and add in a histosys uncertainty — both controlled by a shared single nuisance parameter.

model = pyhf.Model(
    {
        "channels": [
            {
                "name": "singlechannel",
                "samples": [
                    {
                        "name": "signal",
                        "data": [5.0, 10.0],
                        "modifiers": [
                            {
                                "name": "shared_parameter",
                                "type": "normsys",
                                "data": {"hi": 0.9, "lo": 1.1},
                            },
                            {
                                "name": "shared_parameter",
                                "type": "histosys",
                                "data": {"hi_data": [15, 22], "lo_data": [5, 18]},
                            },
                        ],
                    }
                ],
            }
        ]
    },
    poi_name=None,
)

print(f" aux data: {model.config.auxdata}")
print(f" down: {model.expected_data([-1.0])}")
print(f" nominal: {model.expected_data([0.0])}")
print(f" up: {model.expected_data([1.0])}")

@widgets.interact(shared_parameter=(-1, 1, 0.1))
def interact(shared_parameter=0):
    print(
        f"shared_parameter = {shared_parameter:4.1f}: {model.expected_data([shared_parameter])}"
    )

aux data: [0.0]

down: [ 5.5 19.8 -1. ]

nominal: [ 5. 10. 0.]

up: [13.5 19.8 1. ]

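We’re seeing the impact of an additive, bin-wise correlated shape modification (histosys) combined with a multiplicative normalisation uncertainty (normsys). Because the two modifiers share one name, they are controlled by a single nuisance parameter with a single Gaussian constraint, which is why the auxiliary data has only one entry.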
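The printed down, nominal, and up rates can be checked by hand: the histosys shifts the nominal rates additively, and the normsys then scales the shifted rates multiplicatively, both driven by the same `alpha`. The sketch below assumes simple piecewise-linear (histosys) and piecewise-exponential (normsys) interpolation; pyhf's defaults are smoother between -1 and 1 but should coincide with these at `alpha = -1, 0, 1`. All function names are hypothetical.

```python
def histosys_delta(alpha, nominal, hi_data, lo_data):
    """Additive per-bin shift, piecewise linear in alpha (assumed interpolation)."""
    if alpha >= 0:
        return [alpha * (hi - nom) for hi, nom in zip(hi_data, nominal)]
    return [alpha * (nom - lo) for nom, lo in zip(nominal, lo_data)]


def normsys_factor(alpha, hi, lo):
    """Multiplicative factor, piecewise exponential in alpha (assumed interpolation)."""
    return hi**alpha if alpha >= 0 else lo ** (-alpha)


def expected_rates(alpha):
    """Apply both modifiers with one shared parameter: factor * (nominal + delta)."""
    nominal = [5.0, 10.0]
    delta = histosys_delta(alpha, nominal, hi_data=[15.0, 22.0], lo_data=[5.0, 18.0])
    factor = normsys_factor(alpha, hi=0.9, lo=1.1)
    return [factor * (nom + d) for nom, d in zip(nominal, delta)]


for label, alpha in (("down", -1.0), ("nominal", 0.0), ("up", 1.0)):
    print(f"{label:>8}: {[round(rate, 6) for rate in expected_rates(alpha)]}")
```

For example, at `alpha = 1` the histosys moves the rates to [15, 22] and the normsys multiplies them by 0.9, giving [13.5, 19.8] as in the output above.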