Using HEPData

Contents

Using HEPData¶

import json

import pyhf

import pyhf.contrib.utils

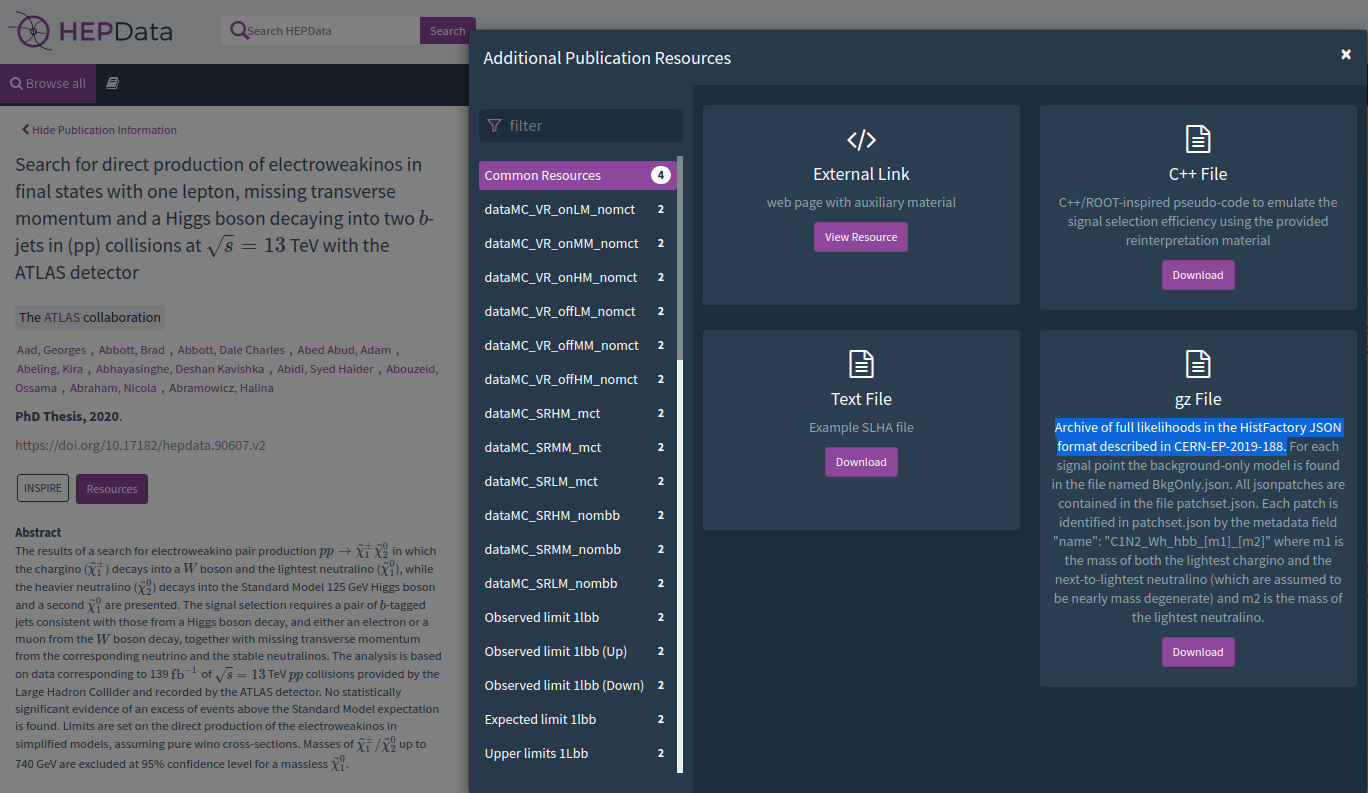

Preserved on HEPData¶

As of this tutorial, ATLAS has published 18 full statistical models to HEPData

Let’s explore the 1Lbb workspace a little bit shall we?

Getting the Data¶

We’ll use the pyhf[contrib] extra (which relies on requests and tarfile) to download the HEPData minted DOI and extract the files we need.

pyhf.contrib.utils.download(

"https://doi.org/10.17182/hepdata.90607.v3/r3", "1Lbb-likelihoods"

)

This will nicely download and extract everything we need.

!ls -lavh 1Lbb-likelihoods

total 59M

drwxr-xr-x 2 root root 4.0K Jul 20 16:27 .

drwxr-xr-x 6 root root 4.0K Jul 20 16:27 ..

-rw-r--r-- 1 1000 1000 4.3M May 7 2020 BkgOnly.json

-rw-r--r-- 1 1000 1000 1.4K May 30 2020 README.md

-rw-r--r-- 1 1000 1000 55M May 31 2020 patchset.json

Instantiate our objects¶

We have a background-only workspace BkgOnly.json and a signal patchset collection patchset.json. Let’s create our python objects and play with them:

spec = json.load(open("1Lbb-likelihoods/BkgOnly.json"))

patchset = pyhf.PatchSet(json.load(open("1Lbb-likelihoods/patchset.json")))

So what did the analyzers give us for signal patches?

Patching in Signals¶

Let’s look at this pyhf.PatchSet object which provides a user-friendly way to interact with many signal patches at once.

PatchSet¶

patchset

<pyhf.patchset.PatchSet object with 125 patches at 0x7fc421f48b20>

Oh wow, we’ve got 125 patches. What information does it have?

print(f"description: {patchset.description}")

print(f" digests: {patchset.digests}")

print(f" labels: {patchset.labels}")

print(f" references: {patchset.references}")

print(f" version: {patchset.version}")

description: signal patchset for the SUSY EWK 1Lbb analysis

digests: {'sha256': '2563672e1a165384faf49f1431e921d88c9c07ec10f150d5045576564f98f820'}

labels: ['m1', 'm2']

references: {'hepdata': 'ins1755298'}

version: 1.0.0

So we’ve got a useful description of the signal patches… there’s a digest. Does that match the background-only workspace we have?

pyhf.utils.digest(spec)

'2563672e1a165384faf49f1431e921d88c9c07ec10f150d5045576564f98f820'

It does! In fact, this sort of verification check will be done automatically when applying patches using pyhf.PatchSet as we will see shortly. To manually verify, simply run pyhf.PatchSet.verify on the workspace. No error means everything is fine. It will loudly complain otherwise.

patchset.verify(spec)

No error, whew. Let’s move on.

The labels m1 and m2 tells us that we have the signal patches parametrized in 2-dimensional space, likely as \(m_1 = \tilde{\chi}_1^\pm\) and \(m_2 = \tilde{\chi}_1^0\)… but I guess we’ll see?

The references list the references for this dataset, which is pointing at the hepdata record for now.

Next, the version is the version of the schema set we’re using with pyhf (1.0.0).

And last, but certainly not least… its patches:

patchset.patches

[<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_0(1000, 0)' at 0x7fc45ec232b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_100(1000, 100)' at 0x7fc421f3ea30>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_150(1000, 150)' at 0x7fc421f3e820>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_200(1000, 200)' at 0x7fc421f3e7c0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_250(1000, 250)' at 0x7fc4220cc340>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_300(1000, 300)' at 0x7fc421fabe80>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_350(1000, 350)' at 0x7fc421fabd60>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_400(1000, 400)' at 0x7fc421fabd90>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_1000_50(1000, 50)' at 0x7fc458551cd0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_150_0(150, 0)' at 0x7fc458551400>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_165_35(165, 35)' at 0x7fc4221339d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_175_0(175, 0)' at 0x7fc45855c160>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_175_25(175, 25)' at 0x7fc45855c9a0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_190_60(190, 60)' at 0x7fc45855c790>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_200_0(200, 0)' at 0x7fc45855c8b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_200_25(200, 25)' at 0x7fc4584e17f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_200_50(200, 50)' at 0x7fc4584e13a0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_225_0(225, 0)' at 0x7fc4584e1a60>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_225_25(225, 25)' at 0x7fc4584e1280>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_225_50(225, 50)' at 0x7fc421f66700>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_250_0(250, 0)' at 0x7fc421f661c0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_250_100(250, 100)' at 0x7fc421f661f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_250_25(250, 25)' at 0x7fc421f66bb0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_250_50(250, 50)' at 0x7fc421f66a30>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_250_75(250, 75)' at 0x7fc421f66280>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_275_0(275, 0)' at 0x7fc421f66760>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_275_25(275, 25)' at 0x7fc421f669a0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_275_50(275, 50)' at 0x7fc421f663a0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_275_75(275, 75)' at 0x7fc421f66520>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_300_0(300, 0)' at 0x7fc421f66790>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_300_150(300, 150)' at 0x7fc421f66b80>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_300_25(300, 25)' at 0x7fc421f66af0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_300_50(300, 50)' at 0x7fc421f66d00>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_300_75(300, 75)' at 0x7fc421f660a0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_325_0(325, 0)' at 0x7fc421f66130>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_325_50(325, 50)' at 0x7fc421f66190>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_0(350, 0)' at 0x7fc421f669d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_100(350, 100)' at 0x7fc421f668b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_150(350, 150)' at 0x7fc421f668e0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_200(350, 200)' at 0x7fc421f66910>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_25(350, 25)' at 0x7fc421f66220>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_50(350, 50)' at 0x7fc421f66370>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_350_75(350, 75)' at 0x7fc421f66430>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_375_0(375, 0)' at 0x7fc421f66b20>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_375_50(375, 50)' at 0x7fc421f66c70>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_0(400, 0)' at 0x7fc421f66d90>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_100(400, 100)' at 0x7fc421f66dc0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_150(400, 150)' at 0x7fc421f66e50>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_200(400, 200)' at 0x7fc421f66eb0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_25(400, 25)' at 0x7fc421f66f10>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_250(400, 250)' at 0x7fc421f66f70>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_400_50(400, 50)' at 0x7fc421f66fd0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_425_0(425, 0)' at 0x7fc418a35070>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_0(450, 0)' at 0x7fc418a350d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_100(450, 100)' at 0x7fc418a35130>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_150(450, 150)' at 0x7fc418a35190>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_200(450, 200)' at 0x7fc418a351f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_250(450, 250)' at 0x7fc418a35250>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_300(450, 300)' at 0x7fc418a352b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_450_50(450, 50)' at 0x7fc418a35310>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_0(500, 0)' at 0x7fc418a35370>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_100(500, 100)' at 0x7fc418a353d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_150(500, 150)' at 0x7fc418a35430>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_200(500, 200)' at 0x7fc418a35490>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_250(500, 250)' at 0x7fc418a354f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_300(500, 300)' at 0x7fc418a35550>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_350(500, 350)' at 0x7fc418a355b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_500_50(500, 50)' at 0x7fc418a35610>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_535_400(535, 400)' at 0x7fc418a35670>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_0(550, 0)' at 0x7fc418a356d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_100(550, 100)' at 0x7fc418a35730>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_150(550, 150)' at 0x7fc418a35790>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_200(550, 200)' at 0x7fc418a357f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_250(550, 250)' at 0x7fc418a35850>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_300(550, 300)' at 0x7fc418a358b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_550_50(550, 50)' at 0x7fc418a35910>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_0(600, 0)' at 0x7fc418a35970>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_100(600, 100)' at 0x7fc418a359d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_150(600, 150)' at 0x7fc418a35a30>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_200(600, 200)' at 0x7fc418a35a90>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_250(600, 250)' at 0x7fc418a35af0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_300(600, 300)' at 0x7fc418a35b50>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_350(600, 350)' at 0x7fc418a35bb0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_400(600, 400)' at 0x7fc418a35c10>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_600_50(600, 50)' at 0x7fc418a35c70>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_0(650, 0)' at 0x7fc418a35cd0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_100(650, 100)' at 0x7fc418a35d30>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_150(650, 150)' at 0x7fc418a35d90>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_200(650, 200)' at 0x7fc418a35df0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_250(650, 250)' at 0x7fc418a35e50>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_300(650, 300)' at 0x7fc418a35eb0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_650_50(650, 50)' at 0x7fc418a35f10>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_0(700, 0)' at 0x7fc418a35f70>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_100(700, 100)' at 0x7fc418a35fd0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_150(700, 150)' at 0x7fc418a37070>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_200(700, 200)' at 0x7fc418a370d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_250(700, 250)' at 0x7fc418a37130>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_300(700, 300)' at 0x7fc418a37190>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_350(700, 350)' at 0x7fc418a371f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_400(700, 400)' at 0x7fc418a37250>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_700_50(700, 50)' at 0x7fc418a372b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_750_100(750, 100)' at 0x7fc418a37310>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_750_150(750, 150)' at 0x7fc418a37370>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_750_200(750, 200)' at 0x7fc418a373d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_750_250(750, 250)' at 0x7fc418a37430>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_750_300(750, 300)' at 0x7fc418a37490>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_750_50(750, 50)' at 0x7fc418a374f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_0(800, 0)' at 0x7fc418a37550>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_100(800, 100)' at 0x7fc418a375b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_150(800, 150)' at 0x7fc418a37610>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_200(800, 200)' at 0x7fc418a37670>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_250(800, 250)' at 0x7fc418a376d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_300(800, 300)' at 0x7fc418a37730>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_350(800, 350)' at 0x7fc418a37790>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_400(800, 400)' at 0x7fc418a377f0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_800_50(800, 50)' at 0x7fc418a37850>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_0(900, 0)' at 0x7fc418a378b0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_100(900, 100)' at 0x7fc418a37910>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_150(900, 150)' at 0x7fc418a37970>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_200(900, 200)' at 0x7fc418a379d0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_250(900, 250)' at 0x7fc418a37a30>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_300(900, 300)' at 0x7fc418a37a90>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_350(900, 350)' at 0x7fc418a37af0>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_400(900, 400)' at 0x7fc418a37b50>,

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_50(900, 50)' at 0x7fc418a37bb0>]

So we can see all the patches listed both by name such as C1N2_Wh_hbb_900_250 as well as a pair of points (900, 250). Why is this useful? The PatchSet object acts like a special dictionary look-up where it will grab the patch you need based on the unique key you provide it.

For example, we can look up by name

patchset["C1N2_Wh_hbb_900_250"]

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_250(900, 250)' at 0x7fc418a37a30>

or by the pair of points

patchset[(900, 250)]

<pyhf.patchset.Patch object 'C1N2_Wh_hbb_900_250(900, 250)' at 0x7fc418a37a30>

Patches¶

A pyhf.PatchSet is a collection of pyhf.Patch objects. What is a patch indeed? It contains enough information about how to apply the signal patch to the corresponding background-only workspace (matched by digest).

patch = patchset["C1N2_Wh_hbb_900_250"]

print(f" name: {patch.name}")

print(f"values: {patch.values}")

name: C1N2_Wh_hbb_900_250

values: (900, 250)

Most importantly, it contains the patch information itself. Specifically, this inherits from the jsonpatch.JsonPatch object, which is a 3rd party module providing native support for json patching in python. That means we can simply apply the patch to our workspace directly!

print(f" samples pre-patch: {pyhf.Workspace(spec).samples}")

print(f"samples post-patch: {pyhf.Workspace(patch.apply(spec)).samples}")

samples pre-patch: ['diboson', 'multiboson', 'singletop', 'ttbar', 'tth', 'ttv', 'vh', 'wjets', 'zjets']

samples post-patch: ['C1N2_Wh_hbb_900_250', 'diboson', 'multiboson', 'singletop', 'ttbar', 'tth', 'ttv', 'vh', 'wjets', 'zjets']

Or, more quickly, from the PatchSet object:

print(f" samples pre-patch: {pyhf.Workspace(spec).samples}")

print(f"samples post-patch: {pyhf.Workspace(patchset.apply(spec, (900, 250))).samples}")

samples pre-patch: ['diboson', 'multiboson', 'singletop', 'ttbar', 'tth', 'ttv', 'vh', 'wjets', 'zjets']

samples post-patch: ['C1N2_Wh_hbb_900_250', 'diboson', 'multiboson', 'singletop', 'ttbar', 'tth', 'ttv', 'vh', 'wjets', 'zjets']

Patching via Model Creation¶

One last way to apply the patching is to, instead of patching workspaces, we patch the models as we build them from the background-only workspace. This maybe makes it easier to treat the background-only workspace as immutable, and patch in signal models when grabbing the model. Check it out.

workspace = pyhf.Workspace(spec)

First, load up our background-only spec into the workspace. Then let’s create a model.

model = workspace.model(patches=[patchset["C1N2_Wh_hbb_900_250"]])

print(f"samples (workspace): {workspace.samples}")

print(f"samples ( model ): {model.config.samples}")

samples (workspace): ['diboson', 'multiboson', 'singletop', 'ttbar', 'tth', 'ttv', 'vh', 'wjets', 'zjets']

samples ( model ): ['C1N2_Wh_hbb_900_250', 'diboson', 'multiboson', 'singletop', 'ttbar', 'tth', 'ttv', 'vh', 'wjets', 'zjets']

Doing Physics¶

So we want to try and reproduce part of the contour. At least convince ourselves we’re doing physics and not fauxsics. … Anyway… Let’s remind ourselves of the 1Lbb contour as we don’t have the photographic memory of the ATLAS SUSY conveners

So let’s work around the 700-900 GeV \(\tilde{\chi}_1^\pm, \tilde{\chi}_2^0\) region. We’ll look at two points here:

C1N2_Wh_hbb_650_0(650, 0)which is below the contour and excludedC1N2_Wh_hbb_1000_0(1000, 0)which is above the contour and not excluded

Let’s perform a “standard” hypothesis test (with \(\mu = 1\) null BSM hypothesis) on both of these and use the \(\text{CL}_\text{s}\) values to convince ourselves that we just did reproducible physics!?!

Doing Physics, for real now¶

model_below = workspace.model(patches=[patchset["C1N2_Wh_hbb_650_0"]])

model_above = workspace.model(patches=[patchset["C1N2_Wh_hbb_1000_0"]])

We’ve made our models. Let’s test hypotheses!

Note: this will not be as instantaneous as our simple models…but it should still be pretty fast!

test_poi = 1.0

result_below = pyhf.infer.hypotest(

test_poi,

workspace.data(model_below),

model_below,

test_stat="qtilde",

return_expected_set=True,

)

print(f"Observed CLs: {result_below[0]}")

print(f"Expected CLs band: {[exp.tolist() for exp in result_below[1]]}")

/__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/tensor/numpy_backend.py:352: RuntimeWarning: invalid value encountered in log

return n * np.log(lam) - lam - gammaln(n + 1.0)

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

Input In [18], in <cell line: 2>()

1 test_poi = 1.0

----> 2 result_below = pyhf.infer.hypotest(

3 test_poi,

4 workspace.data(model_below),

5 model_below,

6 test_stat="qtilde",

7 return_expected_set=True,

8 )

10 print(f"Observed CLs: {result_below[0]}")

11 print(f"Expected CLs band: {[exp.tolist() for exp in result_below[1]]}")

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/infer/__init__.py:159, in hypotest(poi_test, data, pdf, init_pars, par_bounds, fixed_params, calctype, return_tail_probs, return_expected, return_expected_set, **kwargs)

147 _check_hypotest_prerequisites(pdf, data, init_pars, par_bounds, fixed_params)

149 calc = utils.create_calculator(

150 calctype,

151 data,

(...)

156 **kwargs,

157 )

--> 159 teststat = calc.teststatistic(poi_test)

160 sig_plus_bkg_distribution, bkg_only_distribution = calc.distributions(poi_test)

162 tb, _ = get_backend()

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/infer/calculators.py:348, in AsymptoticCalculator.teststatistic(self, poi_test)

338 asimov_mu = 1.0 if self.test_stat == 'q0' else 0.0

340 asimov_data = generate_asimov_data(

341 asimov_mu,

342 self.data,

(...)

346 self.fixed_params,

347 )

--> 348 qmuA_v = teststat_func(

349 poi_test,

350 asimov_data,

351 self.pdf,

352 self.init_pars,

353 self.par_bounds,

354 self.fixed_params,

355 )

356 self.sqrtqmuA_v = tensorlib.sqrt(qmuA_v)

358 if self.test_stat in ["q", "q0"]: # qmu or q0

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/infer/test_statistics.py:189, in qmu_tilde(mu, data, pdf, init_pars, par_bounds, fixed_params)

184 if par_bounds[pdf.config.poi_index][0] != 0:

185 log.warning(

186 'qmu_tilde test statistic used for fit configuration with POI not bounded at zero.\n'

187 + 'Use the qmu test statistic (pyhf.infer.test_statistics.qmu) instead.'

188 )

--> 189 return _qmu_like(mu, data, pdf, init_pars, par_bounds, fixed_params)

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/infer/test_statistics.py:25, in _qmu_like(mu, data, pdf, init_pars, par_bounds, fixed_params)

17 """

18 Clipped version of _tmu_like where the returned test statistic

19 is 0 if muhat > 0 else tmu_like_stat.

(...)

22 qmu_tilde. Otherwise this is qmu (no tilde).

23 """

24 tensorlib, optimizer = get_backend()

---> 25 tmu_like_stat, (_, muhatbhat) = _tmu_like(

26 mu, data, pdf, init_pars, par_bounds, fixed_params, return_fitted_pars=True

27 )

28 qmu_like_stat = tensorlib.where(

29 muhatbhat[pdf.config.poi_index] > mu, tensorlib.astensor(0.0), tmu_like_stat

30 )

31 return qmu_like_stat

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/infer/test_statistics.py:47, in _tmu_like(mu, data, pdf, init_pars, par_bounds, fixed_params, return_fitted_pars)

43 tensorlib, optimizer = get_backend()

44 mubhathat, fixed_poi_fit_lhood_val = fixed_poi_fit(

45 mu, data, pdf, init_pars, par_bounds, fixed_params, return_fitted_val=True

46 )

---> 47 muhatbhat, unconstrained_fit_lhood_val = fit(

48 data, pdf, init_pars, par_bounds, fixed_params, return_fitted_val=True

49 )

50 log_likelihood_ratio = fixed_poi_fit_lhood_val - unconstrained_fit_lhood_val

51 tmu_like_stat = tensorlib.astensor(

52 tensorlib.clip(log_likelihood_ratio, 0.0, max_value=None)

53 )

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/infer/mle.py:131, in fit(data, pdf, init_pars, par_bounds, fixed_params, **kwargs)

124 # get fixed vals from the model

125 fixed_vals = [

126 (index, init)

127 for index, (init, is_fixed) in enumerate(zip(init_pars, fixed_params))

128 if is_fixed

129 ]

--> 131 return opt.minimize(

132 twice_nll, data, pdf, init_pars, par_bounds, fixed_vals, **kwargs

133 )

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/optimize/mixins.py:184, in OptimizerMixin.minimize(self, objective, data, pdf, init_pars, par_bounds, fixed_vals, return_fitted_val, return_result_obj, return_uncertainties, return_correlations, do_grad, do_stitch, **kwargs)

181 par_names[index] = None

182 par_names = [name for name in par_names if name]

--> 184 result = self._internal_minimize(

185 **minimizer_kwargs, options=kwargs, par_names=par_names

186 )

187 result = self._internal_postprocess(

188 result, stitch_pars, return_uncertainties=return_uncertainties

189 )

191 _returns = [result.x]

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/optimize/mixins.py:50, in OptimizerMixin._internal_minimize(self, func, x0, do_grad, bounds, fixed_vals, options, par_names)

31 def _internal_minimize(

32 self,

33 func,

(...)

39 par_names=None,

40 ):

42 minimizer = self._get_minimizer(

43 func,

44 x0,

(...)

48 par_names=par_names,

49 )

---> 50 result = self._minimize(

51 minimizer,

52 func,

53 x0,

54 do_grad=do_grad,

55 bounds=bounds,

56 fixed_vals=fixed_vals,

57 options=options,

58 )

60 try:

61 assert result.success

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/pyhf/optimize/opt_scipy.py:93, in scipy_optimizer._minimize(self, minimizer, func, x0, do_grad, bounds, fixed_vals, options)

90 else:

91 constraints = []

---> 93 return minimizer(

94 func,

95 x0,

96 method=method,

97 jac=do_grad,

98 bounds=bounds,

99 constraints=constraints,

100 tol=tolerance,

101 options=dict(maxiter=maxiter, disp=bool(verbose), **solver_options),

102 )

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/scipy/optimize/_minimize.py:701, in minimize(fun, x0, args, method, jac, hess, hessp, bounds, constraints, tol, callback, options)

698 res = _minimize_cobyla(fun, x0, args, constraints, callback=callback,

699 **options)

700 elif meth == 'slsqp':

--> 701 res = _minimize_slsqp(fun, x0, args, jac, bounds,

702 constraints, callback=callback, **options)

703 elif meth == 'trust-constr':

704 res = _minimize_trustregion_constr(fun, x0, args, jac, hess, hessp,

705 bounds, constraints,

706 callback=callback, **options)

File /__t/Python/3.9.13/x64/lib/python3.9/site-packages/scipy/optimize/_slsqp_py.py:422, in _minimize_slsqp(func, x0, args, jac, bounds, constraints, maxiter, ftol, iprint, disp, eps, callback, finite_diff_rel_step, **unknown_options)

418 a = _eval_con_normals(x, cons, la, n, m, meq, mieq)

420 while 1:

421 # Call SLSQP

--> 422 slsqp(m, meq, x, xl, xu, fx, c, g, a, acc, majiter, mode, w, jw,

423 alpha, f0, gs, h1, h2, h3, h4, t, t0, tol,

424 iexact, incons, ireset, itermx, line,

425 n1, n2, n3)

427 if mode == 1: # objective and constraint evaluation required

428 fx = wrapped_fun(x)

KeyboardInterrupt:

result_above = pyhf.infer.hypotest(

test_poi,

workspace.data(model_above),

model_above,

test_stat="qtilde",

return_expected_set=True,

)

print(f"Observed CLs: {result_above[0]}")

print(f"Expected CLs band: {[exp.tolist() for exp in result_above[1]]}")

And as you can see, we’re getting results that we generally expect. Excluded models are those for which \(\text{CL}_\text{s} < 0.05\). Additionally, you can see that the expected bands \(-2\sigma\) for the \((1000, 0)\) point is just slightly below the observed result for the \((650, 0)\) point which is what we observe in the figure above.